I have done absolutely nothing related to this year's recruiting classes and the Signing Day that came and went over a month ago. This is not because I have zero interest in recruiting; it's because there's simply so much going on that trying to follow it from a national perspective is pointless. It's not possible. I also have little desire to follow the whims of 17-year-olds.

What is definitely NOT the reason for my lack of coverage is the stupid misconception that permeates message boards everywhere that recruiting doesn't matter because of West Virginia and Boise State and (insert name of two-star guy who turned out awesome). The anecdotal arguments are everywhere and ignore the numerical evidence that is available if you'd like to put it together ... except somebody has already done that.

Given the depressing news of the week, it seems appropriate to point out that Dr. Saturday has been (was?) on about a four-year quest to explain to people that recruiting rankings actually do* matter. This quest started on Sunday Morning Quarterback with some relatively basic analysis and has been carried over to Yahoo with a lot more numbers that just back up the original numbers.

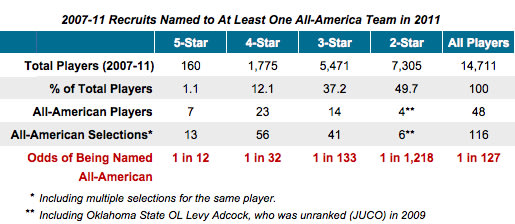

I'm gonna steal a couple charts here to establish a few things going forward. The first is a breakdown of All-Americans by star rankings along with their corresponding odds of becoming an All-American:

Explanation:

Maybe a raw ratio of 1 in 12 (or even 1 in 10, or whatever the "adjusted" number is after accounting for the early departures, injuries and academics that these numbers make no attempt to reflect) isn't all that impressive by itself. After all, that means far more elite recruits are falling short of their star-studded birthright than are reaching it. Across the board, failure and mediocrity are the norm, but if you think of a four or five-star player as a guy who is supposed to become an All-American (and a two or three-star guy as someone who is definitely not supposed to become an All-American), then yes, the rankings frequently miss.

On the other hand, if you consider the initial grade as a kind of investment (a projection of how likely a player is to become an elite contributor compared to the rest of the field), well, you'd put your money with the "experts" over the chances of finding the proverbial diamond in the rough every time.

Upshot: A five-star player is about 11 times more likely to become an All-American than a three-star player and 100 times (!) more likely to become an All-American than a two-star player. The drop-off isn't just linear but exponential, which is amazing (lol nerd). Think about that and what it means from an odds standpoint: Talent evaluation is not a complete crapshoot and shouldn't be devalued just because Mike Hart and Kellen Moore turned out to be really good and Ryan Perrilloux had no idea how to find his classes. The scouting dudes are pretty good when you zoom out.
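For anyone who wants to see what those multipliers imply, here's a quick back-of-the-envelope sketch. It assumes the "1 in 12" raw ratio refers to five-star recruits (the quote implies that but doesn't say so outright) and treats the 11x and 100x figures as exact, which they aren't. The names `FIVE_STAR_RATE`, `FIVE_STAR_ADVANTAGE` and `implied_rate` are made up for illustration.

```python
from fractions import Fraction

# Assumption: the quoted "1 in 12" raw ratio is the five-star All-American rate.
FIVE_STAR_RATE = Fraction(1, 12)

# "Times more likely" multipliers cited in the post (approximate in reality).
FIVE_STAR_ADVANTAGE = {3: 11, 2: 100}


def implied_rate(five_star_rate: Fraction, advantage: int) -> Fraction:
    """Back out a lower tier's rate from the five-star rate and its multiplier."""
    if advantage <= 0:
        raise ValueError("advantage must be positive")
    return five_star_rate / advantage


implied = {
    stars: implied_rate(FIVE_STAR_RATE, multiplier)
    for stars, multiplier in FIVE_STAR_ADVANTAGE.items()
}

for stars, rate in sorted(implied.items(), reverse=True):
    print(f"{stars}-star: roughly 1 in {rate.denominator}")
```

Under those assumptions the implied odds come out to roughly 1 in 132 for a three-star guy and 1 in 1,200 for a two-star guy, which is the whole point: the diamonds in the rough exist, but you have to dig through a lot of rough.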

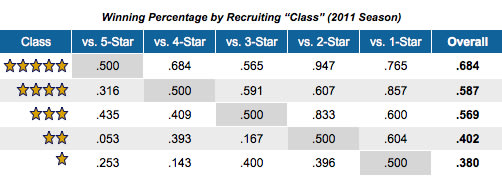

The second relevant chart shows the not-quite-as-easy-to-calculate correlation between team recruiting rankings and wins:

Explanation:

... on the final count, the higher-ranked team according to the recruiting rankings won more than two-thirds of the time (68.7 percent of the time, to be exact), and every "class" as a whole had a winning record against every class ranked below it. The gap on the field also widened with the gap in the recruiting scores: At the extremes, "one-star" and "two-star" recruiting teams managed just five wins over "five-star" recruiters (four of them coming at the expense of Florida State and Texas) in 31 tries.

It's a simple equation: The better your recruiting rankings by the gurus, the better your chances of winning games, against all classes. Emphasis on the word "chances."

FYI, while the chart above is based solely on 2011 games and 2008-11 recruiting classes, the numbers have looked essentially the same every year since Dr. Saturday started doing this thing. That takes care of any questions about whether the 2008 and '09 classes should be weighted more heavily or whatever. The results are the same. I'm actually kinda surprised by the consistency here given that it'd seem like a cumulative collection of recruiting rankings wouldn't necessarily give you an accurate picture of a team and its overall quality, but the numbers are what they are.
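To put the five-wins-in-31-tries figure in perspective, here's a rough sanity check: how often would the underdogs do that badly if every one of those games were a coin flip? This is only a sketch. It assumes the 31 games are independent and uses a plain binomial model, which real schedules don't exactly follow, and the helper name `binomial_tail_at_most` is made up for illustration.

```python
from math import comb

# Figures quoted above: low-rated recruiters vs. "five-star" recruiters.
UPSET_WINS = 5
TRIES = 31


def binomial_tail_at_most(k: int, n: int, p: float = 0.5) -> float:
    """Probability of k or fewer successes in n independent trials."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


upset_rate = UPSET_WINS / TRIES
coin_flip_prob = binomial_tail_at_most(UPSET_WINS, TRIES)

print(f"upset rate: {upset_rate:.1%}")
print(f"chance of {UPSET_WINS} or fewer wins in {TRIES} coin flips: {coin_flip_prob:.6f}")
```

The upset rate is about 16 percent, and the coin-flip scenario produces a result that lopsided roughly once in 10,000 tries. Whatever is going on at the extremes, it isn't random.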

So yeah ... there is a direct, almost-linear correlation between recruiting rankings and both individual awesomeness and team wins. That is not a fluke. I will cite these charts/numbers in every argument about recruiting and how the rankings don't matter and blah blah blah. They are not 100 percent accurate but represent a pretty good level of expectation and are only getting better due to more scouts and more camps and more coverage and whatnot.

The one alleged caveat that will always get brought up and therefore should be discussed here: the self-fulfilling-prophecy thing. It's not uncommon for a lower-profile guy who commits to Alabama/USC/Michigan/Ohio State/whoever to get a "he must be better than we thought" rankings bump, thus exacerbating the numerical gap between the best schools and everybody else. But if that were truly a significant factor (and not a negligible one affecting only a couple under-the-radar guys a year), it would mean Alabama/USC/Michigan/Ohio State/whoever would be getting a whole bunch of not-that-great players who were rated highly only because of their destination, and that can't possibly be accurate given the continued and consistent advantage in wins and number of all-whatever players for those programs (see the charts). One last blockquote:

... it’s an argument that’s wrong on its face to the point of absurdity: it suggests that successful programs just are successful, regardless of the players they recruit.

If the gurus followed the larger schools’ assessments to a fault, if USC was being ranked at the top of the lists simply because it was USC, even if it was signing the same players as UCLA, the Trojans would obviously be closer to UCLA’s results. Substitute "USC" and "UCLA" in that sentence for an overwhelming majority of the possible comparisons between teams who are not close to one another in the rankings.

Word. The rankings-bump argument doesn't make any sense when you think about the actual results. And I have yet to see any data-inclusive response that presents a coherent counterargument to any of this stuff, which is probably because the data ... like ... yeah. There are limited interpretations.

BTW, I realize this would have been way more timely around Signing Day, but I felt compelled to grab some of this info in response to the recruiting-related pieces I've seen in the last month, all of which included a comments section filled with inane crap that must be refuted. Expect this post again in about 11 months; with the Doc gone, somebody's gotta carry the torch of logic.

*They don't matter as much as coaching. There's obviously a reason Florida State/Miami/whoever massively underachieved while Boise State and West Virginia were winning BCS games; just look at those teams' coaches. That said, accumulating talent is still important in the big picture. Exceptions, rule, etc.
